I rephrased the above problem in detail again I hope it gives more clarity in the problem I am facing

Problem Statement: Load JSON type data as a string or dict/json or map<STRING,STRING> into Tiger Graph and query the data using Tiger graph functions parse_json_object or parse_json_array or any alternatives.

Vertex Definition:

CREATE VERTEX example_TestMap(PRIMARY_ID id STRING, mapdata STRING, name STRING, mapdata_2 STRING) WITH STATS=“OUTDEGREE_BY_EDGETYPE”, PRIMARY_ID_AS_ATTRIBUTE=“true”

Data Source:

{

“company”: {

“name”: “TechCorp”,

“employees”: [

{

“id”: 1,

“name”: “John Doe”,

“position”: “Software Engineer”,

“skills”: [“Java”, “JavaScript”],

“projects”: [

{

“id”: “proj-001”,

“name”: “SmartApp”

}

]

}

]

}

}

create a csv file using python:

small_json_example ={

“company”: {

"name": "TechCorp",

"employees": [

{

"id": 1,

"name": "John Doe",

"position": "Software Engineer",

"skills": ["Java", "JavaScript"],

"projects": [

{

"id": "proj-001",

"name": "SmartApp"

}

]

}

]

}

}

Save Data as csv with ‘sep=’\t’,’

small_data_dict=json.dumps(small_json_example)

dkgSignalInterface_df[“json_column”] =small_data_dict // loading as a JSON string

dkgSignalInterface_df[“json_column_2”] =small_data_dict //loading as a dict/JSON

dkgSignalInterface_df[‘json_column’]=dkgSignalInterface_df[‘json_column’].apply(json.loads)

dkgSignalInterface_df[[‘json_column’,‘json_column_2’]].head(2)

o/p:

|

json_column |

json_column_2 |

| 0 |

{‘company’: {‘name’: ‘TechCorp’, ‘employees’: … |

{“company”: {“name”: “TechCorp”, “employees”: … |

| 1 |

{‘company’: {‘name’: ‘TechCorp’, ‘employees’: … |

{“company”: {“name”: “TechCorp”, “employees”: … |

#saved data as csv with ‘tab separated values’

dkgSignalInterface_df.to_csv(’/Workspace/Users/saisarath.vattikuti@intel.com/MLAMA-Datasets/dkgSignalInterface.tsv’,sep=’\t’,header=‘true’,index=False)

LOADING JOB:(python)

conn.gsql(’’’

use graph {0}

CREATE LOADING JOB file_load_job_dkgSignalInterfaceTest_5 FOR GRAPH {0} {{ DEFINE FILENAME MyDataSource;

LOAD MyDataSource TO VERTEX dkgSignalInterface_TestMap VALUES($0, $2,$3,$0) USING SEPARATOR="\t", HEADER=“true”, EOL="\n";

}}'''.format(graphName))

Loading JOB O/p:

results = conn.runLoadingJobWithFile(file_path, fileTag=‘MyDataSource’, jobName=‘file_load_job_dkgSignalInterfaceTest_5’,sep="\t")

print(results)

[{‘sourceFileName’: ‘Online_POST’, ‘statistics’: {‘sourceFileName’: ‘Online_POST’, ‘parsingStatistics’: {‘fileLevel’: {‘validLine’: 10}, ‘objectLevel’: {‘vertex’: [{‘typeName’: ‘dkgSignalInterface_TestMap’, ‘validObject’: 10}], ‘edge’: [], ‘deleteVertex’: [], ‘deleteEdge’: []}}}}]

In Tiger Graph file is saved as:

example data:

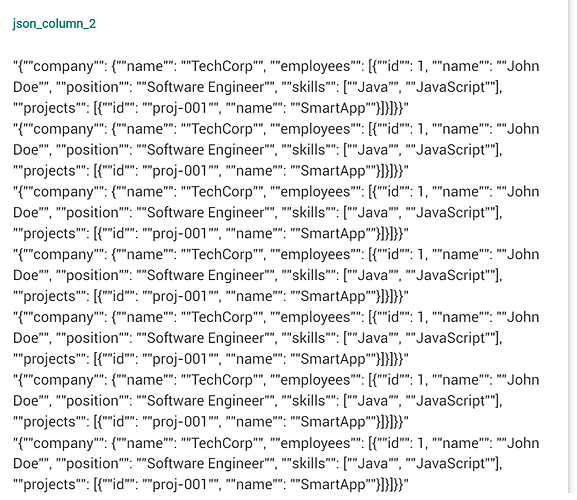

json_column_2

“{”“company”": {"“name”": ““TechCorp””, ““employees””: [{"“id”": 1, ““name””: ““John Doe””, ““position””: ““Software Engineer””, ““skills””: ["“Java”", ““JavaScript””], ““projects””: [{"“id”": ““proj-001"”, ““name””: ““SmartApp””}]}]}}”

“{”“company”": {"“name”": ““TechCorp””, ““employees””: [{"“id”": 1, ““name””: ““John Doe””, ““position””: ““Software Engineer””, ““skills””: ["“Java”", ““JavaScript””], ““projects””: [{"“id”": ““proj-001"”, ““name””: ““SmartApp””}]}]}}”

Read Queries in Tiger Graph:

CREATE QUERY searchAttributes_test_example_post() FOR GRAPH DKG_SPEC_LOAD_TEST{

ListAccum @@mapexample1;

ListAccum @@mapexample2;

JSONOBJECT jsstring;

JSONARRAY jsarray;

INIT = {dkgSignalInterface_TestMap.*};

// Get person p’s secret_info and portfolio

X = SELECT v FROM INIT:v

ACCUM @@mapexample1 += v.mapdata;

FOREACH item IN @@mapexample1 DO

// jsstring=parse_json_object(item);

print item;

break;

END;

// PRINT @@portf;

}

O/P:

[

{

“item”: “”{"“company”": {"“name”": ““TechCorp””, ““employees””: [{"“id”": 1, ““name””: ““John Doe””, ““position””: ““Software Engineer””, ““skills””: ["“Java”", ““JavaScript””], ““projects””: [{"“id”": ““proj-001"”, ““name””: ““SmartApp””}]}]}}”"

}

]

Error while trying to parse the data

CREATE QUERY searchAttributes_test_example_post() FOR GRAPH DKG_SPEC_LOAD_TEST{

ListAccum @@mapexample1;

ListAccum @@mapexample2;

JSONOBJECT jsstring;

JSONARRAY jsarray;

INIT = {dkgSignalInterface_TestMap.*};

// Get person p’s secret_info and portfolio

X = SELECT v FROM INIT:v

ACCUM @@mapexample1 += v.mapdata;

// PRINT @@mapexample1;

FOREACH item IN @@mapexample1 DO

jsstring=parse_json_object(item);

print item;

break;

END;

// PRINT @@portf;

}

jsstring=parse_json_object(item);

O/P:

Runtime Error: “{”“company”": {"“name”": ““TechCorp””, ““employees””: [{"“id”": 1, ““name””: ““John Doe””, ““position””: ““Software Engineer””, ““skills””: ["“Java”", ““JavaScript””], ““projects””: [{"“id”": ““proj-001"”, ““name””: ““SmartApp””}]}]}}” cannot be parsed as a json object.

Need help in resolving this issue

Questions:

1)How to load JSON structured data and query it, are there any best know practices to follow for loading the data into tiger graph and query it using the parse_json_object/parse_json_array functions.

2)Faced issues in loading the file to tiger graph as CSV.

3) Faced issues while loading the JSON/DICT type as a column in a CSV or TSV.

4) Is there any BKM for the above 2 and 3 issues?

Any Help will be much Appreciated

Thanks,

Sai Sarath Vattikuti.