Is there any way to add files on TigerGraph cloud using pyTG?

I have a few CSV files on GitHub and would like to load them into vertices and edges. I couldn’t find any GSQL command or pyTG function that can add these files to TG Cloud.

I tried loading the GitHub files into a pandas DataFrame and then using pyTG’s updateVertexDataFrame(), but this doesn’t work when the files/DataFrames have columns that contain blank/null values.

@mt_nagarro Unfortunately, pyTG doesn’t have the features to upload files into TG cloud at this moment. It might be included in the future, but we would need to evaluate a process to do this without having full access to the underlying servers.

You mentioned exploring updateVertexDataFrame(), and I’ve run into that exact same issue. I’m attaching a notebook in which I retrieve files from a GitHub repo and then use that function (after doing some data cleaning). I hope it helps!
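For readers without access to the notebook, here is a minimal sketch of the kind of cleaning step mentioned above: filling blank cells with a default before the rows go anywhere near the graph. It uses only the standard library; the function name `fill_blank_cells`, the sample data, and the default values are illustrative assumptions, not something taken from the attached notebook. With a pandas DataFrame, `DataFrame.fillna` plays the same role.

```python
import csv
import io


def fill_blank_cells(csv_text, defaults, fallback=""):
    """Parse CSV text and replace empty or missing cells with a per-column default.

    `defaults` maps column name -> replacement value (hypothetical values here).
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    cleaned = []
    for row in reader:
        cleaned.append({
            col: (val if val not in (None, "") else defaults.get(col, fallback))
            for col, val in row.items()
        })
    return cleaned


# Illustrative sample: the second row has a blank name and a blank age.
sample = "id,name,age\n1,Ann,34\n2,,\n"
rows = fill_blank_cells(sample, {"name": "unknown", "age": "0"})
print(rows)
```

Which default is appropriate depends on the attribute types in your schema, so treat the values above as placeholders.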

@mt_nagarro I’ve attached an updated Colab that covers bulk uploads using loading jobs to the cloud via pyTigerGraph. I think this will solve your use case.

https://colab.research.google.com/drive/1fJpcv-q0NLfHj3X1k6Lbwddp8gVVcfES?usp=sharing

Supporting video: https://youtu.be/2BcC3C-qfX4?t=1945

Hey Jonathan (et al)

I am still having trouble getting this to work for multiple files in the same load.

I get a successful load:

Job "Pantopix.load_job_vertices_0_10000_csv_1640275246100.file.m1.1640342568217" loading status

[RUNNING] m1 ( Finished: 0 / Total: 1 )

Job "Pantopix.load_job_vertices_0_10000_csv_1640275246100.file.m1.1640342568217" loading status

[FINISHED] m1 ( Finished: 1 / Total: 1 )

[LOADED]

+---------------------------------------------------------------------------------------------------------------------------+
| FILENAME | LOADED LINES | AVG SPEED | DURATION |
| /home/tigergraph/tigergraph/data/gui/loading_data/vertices_20002-30002.json.csv | 10001 | 91 kl/s | 0.11 s |
+---------------------------------------------------------------------------------------------------------------------------+

[Finished in 32.5s]

(after manually putting the files into TigerGraph)

But I have multiple files of the same type, and I want to load all of them using pyTigerGraph.

My Python code looks like this:

token = tg.TigerGraphConnection(host=hostname, graphname=graph).getToken(secret, "<token_lifetime>")[0]

conn = tg.TigerGraphConnection(host=hostname, graphname=graph, password=password, apiToken=token)

dataSource='"/home/tigergraph/tigergraph/data/gui/loading_data/vertices_20002-30002.json.csv"'

print(dataSource)

gsqlQuery='''

USE GRAPH Pantopix

RUN LOADING JOB load_job_vertices_0_10000_csv_1640275246100 USING MyDataSource='''+dataSource+'''

'''

print(gsqlQuery)

results = conn.gsql(gsqlQuery)

print(results)

But that filename isn’t explicitly mapped in the GUI. Does that mean I have to explicitly create mappings for ALL my files in the GUI (yikes), or can I map a single generic “loader” filename and then point it at each of my files in turn, looping through everything I want to load?

I hope that makes sense.

Frank
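As a rough illustration of the second option in the question above, the loop below builds one `RUN LOADING JOB ... USING <file variable>="<path>"` statement per server-side file, reusing a single file variable rather than one mapping per file. The helper name `build_load_statements` is hypothetical; the graph, job, file variable, and paths are the ones quoted in this thread. Whether this fits depends on how the loading job defines its file variable, so treat it as a sketch under that assumption.

```python
def build_load_statements(graph, job, file_var, server_paths):
    """Return one GSQL snippet per file, each overriding the same file variable."""
    statements = []
    for path in server_paths:
        statements.append(
            f"USE GRAPH {graph}\n"
            f'RUN LOADING JOB {job} USING {file_var}="{path}"\n'
        )
    return statements


# Paths as they appear in this thread; they must already exist on the server.
base = "/home/tigergraph/tigergraph/data/gui/loading_data/"
server_paths = [
    base + "vertices_20002-30002.json.csv",
    base + "vertices_420042-430042.csv",
]

statements = build_load_statements(
    "Pantopix",
    "load_job_vertices_0_10000_csv_1640275246100",
    "MyDataSource",
    server_paths,
)

for stmt in statements:
    print(stmt)
    # With a live pyTigerGraph connection you would run each one, e.g.:
    # print(conn.gsql(stmt))
```

Building the statements separately from running them makes it easy to print and inspect the exact GSQL, including the quoting of the path, before sending it to the server.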

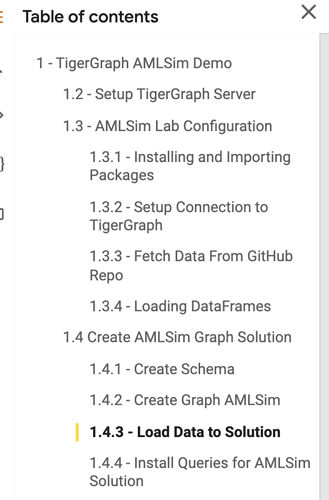

@fjblau You are back!!! We missed you over here! Let’s catch up. I’m attaching a python notebook that has some sample data loading code chunks. Navigate to section 1.4.3. Feel free to browse all the other sections as well. Let me know if that helps.

I tried the one at:

But it never loads the files into the graph. It does LOAD them and I get the right row count, but there are about 40 files that I need to load with the same job. Is there an example of loading a whole directory of files with PyTigerGraph?

Frank
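One way to approach the "whole directory" question is a small loop that hands every matching file to an upload function. The sketch below takes that function as a parameter so it can be tried without a server; with a real connection you would presumably pass `conn.uploadFile`, which is called later in this thread with a local path, a file tag, and a job name. The helper `upload_directory` and the stand-in `fake_upload` are hypothetical names used only for illustration.

```python
import tempfile
from pathlib import Path


def upload_directory(upload, directory, file_tag, job_name, pattern="*.csv"):
    """Call upload(path, file_tag, job_name) for each matching file; collect results by filename."""
    results = {}
    for path in sorted(Path(directory).glob(pattern)):
        results[path.name] = upload(str(path), file_tag, job_name)
    return results


def fake_upload(file_path, file_tag, job_name):
    """Stand-in for a real uploader so the loop can be exercised offline."""
    return f"{file_tag}:{Path(file_path).name}"


# Demonstration against a throwaway directory with two CSVs and one unrelated file.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("vertices_a.csv", "vertices_b.csv", "notes.txt"):
        Path(tmp, name).write_text("id\n1\n")
    results = upload_directory(fake_upload, tmp, "MyDataSource", "my_job")

print(results)
```

Keeping the per-file results in a dictionary makes it easy to spot which of the roughly 40 files failed, instead of stopping at the first error.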

When I try to load the file using pyTigerGraph:

localFilePath='/Users/frankblau/pantopix/data/'+file.name+'.csv'

print("Local:", localFilePath)

dataSource='/home/tigergraph/tigergraph/data/gui/loading_data/'+file.name+'.csv'

print(dataSource)

results = conn.uploadFile(localFilePath, "MyDataSouce", "load_job_vertices_0_10000_csv_1640275246100")

I get the error:

('Exception in OnFinish: GetResult index out of range, index: 0 | response_datas_.size()0', 'REST-10005')

OK, I fixed that error with the double quotes… but now I am getting:

Local: "/Users/frankblau/pantopix/data/vertices_420042-430042.csv"

/home/tigergraph/tigergraph/data/gui/loading_data/vertices_420042-430042.csv

Upload: None

Run: Using graph 'Pantopix'

Semantic Check Fails: File or directory 'DataRoot/gui/loading_data/vertices_420042-430042.csv' does not exist!

Semantic Check Fails: The FILENAME = 'm1:/home/tigergraph/tigergraph/data/gui/loading_data/vertices_420042-430042.csv' is not in a valid path format.

This happens when I try to run the job after uploading the file. FWIW, I am also getting a “None” result on the upload:

localFilePath='"/Users/frankblau/pantopix/data/'+filename+'"'

print("Local:", localFilePath)

dataSource='/home/tigergraph/tigergraph/data/gui/loading_data/'+filename

print(dataSource)

results = conn.uploadFile(localFilePath, "MyDataSouce", "load_job_vertices_0_10000_csv_1640275246100")

print("Upload:", results)

Hi Frank!

Thanks for reaching out. To load the file from within the TigerGraph Cloud instance using GSQL, you need to replace MyDataSource with filevar,

so that the gsqlQuery becomes:

localFilePath="/Users/frankblau/pantopix/data/"+file.name+".csv"

print("Local:", localFilePath)

filePathInTG="/home/tigergraph/tigergraph/data/gui/loading_data/"+file.name+".csv"

print(filePathInTG)

gsqlQuery="""

USE GRAPH Pantopix

RUN LOADING JOB load_job_vertices_0_10000_csv_1640275246100 USING filevar="""+filePathInTG+"""

"""

If you would rather use pyTigerGraph’s uploadFile function, what you did should work fine:

results = conn.uploadFile(localFilePath, "MyDataSouce", "load_job_vertices_0_10000_csv_1640275246100")

It might be that the file exceeds your nginx max upload size. Could you share the loading job and the file size with me?
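Before sharing the file size, a quick local check along these lines can help narrow things down. It reports whether a path can be found at all and whether the file is larger than a given limit. The limit used here is a made-up placeholder, not the actual nginx setting on any TigerGraph Cloud instance, and `preflight` is a hypothetical helper. The "quoted path" case mirrors the earlier snippet where the local path was wrapped in literal double quotes; whether that explains the "None" result is an assumption, not something confirmed in this thread.

```python
import os
import tempfile

# Placeholder limit for illustration only; check your instance's real setting.
ASSUMED_LIMIT_BYTES = 4096


def preflight(path, max_bytes):
    """Return 'missing', 'too large', or 'ok' for a local file path."""
    if not os.path.isfile(path):
        return "missing"
    if os.path.getsize(path) > max_bytes:
        return "too large"
    return "ok"


with tempfile.TemporaryDirectory() as tmp:
    good = os.path.join(tmp, "vertices.csv")
    with open(good, "wb") as fh:
        fh.write(b"x" * 2048)  # 2 KiB dummy file

    checks = {
        "plain path": preflight(good, ASSUMED_LIMIT_BYTES),
        "small limit": preflight(good, 1024),
        # Literal quote characters inside the string are part of the path.
        "quoted path": preflight('"' + good + '"', ASSUMED_LIMIT_BYTES),
    }

print(checks)
```

Running a check like this over every file before uploading separates path problems from size problems, which otherwise look the same from the client side.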